Everywhere we go, and with every new product launch, there seems to be some AI feature, even when it isn’t really necessary. New tools appear daily, while existing ones evolve and improve. But what’s the endgame?

AI is growing exponentially—how do I actually use it?

Work

I started by using AI to create data transformations and generate structures based on example files. This significantly sped up my workflow. While the results weren’t perfect, they usually only needed a few tweaks or additional prompts.

Next came writing code: basic functions like traversing structures, modifying values, or writing specific SQL SELECT queries. How useful it was depended on the logic and libraries I needed, but even when the code didn’t work out of the box, it often gave me a solid foundation to build on. This was especially helpful when working in unfamiliar languages.

Something I didn’t expect at first: grammar checking. It’s been a huge help, especially when writing blog posts like this one. Over time, I also started using AI to summarize documents, check them for key points, and create project documentation.

Another game-changer: searching through old documentation. Instead of hunting for the right file and page, I can now ask AI to find the relevant section directly.

AI-generated meeting notes came later, but they’re one of the best features. They aren’t always perfect, but because they’re based on transcripts, I can refine them quickly. This alone has cut the time I spend on meetings by up to 50%, freeing me to focus on actual work instead of typing notes in Word or OneNote.

In short, Copilot AI has given me the equivalent of 1–2 extra hours per day—for free.

Fun

AI isn’t just for work. I’ve always dreamed of using it for creative projects I never had time for. Now, that dream is becoming reality.

Image and video generation

I love drawing—like the bear image at the start of this post—and I’ve always hoped AI could help refine my art or even create short animations.

Just 4–5 months ago, I made a video on my YouTube channel explaining how far off we still were. Pretty far: nothing really worked as expected. But now? Things have changed dramatically. I’m so excited about this that I’m planning a follow-up video. I can’t wait to try Veo 3 (though sadly, I’m not in a supported country and don’t want to use a VPN).

I’m currently working on an AI-generated short video, which I’ll cover in a separate post. Due to generation limits, it might take a while.

Music

This was my second most anticipated feature. Unfortunately, Gemini Pro doesn’t include Lyria 2, so I turned to Riffusion. The results are decent, and I’ll be posting them on my AI-focused YouTube channel.

Research

With a Master’s in Computer Science, I’ve always wanted to explore topics outside my field. Thanks to Gemini’s Deep Research, I can now access far more sources than I’d ever have time to read manually. It has helped me not only advance existing research, by discovering new sources and summarizing long documents, but also get started on entirely new topics.

I’ll be publishing my findings either on my website or Google Drive. Of course, you still need to double-check the sources, but it’s a great first filter.

Games and webpage

I wanted to enhance my websites—not this WordPress blog (which basically runs itself), but others like Dreambook and a new subpage: ai.oldwisebear.com.

This subpage was generated with a single prompt in Gemini Canvas and then customized. Normally, it would’ve taken me 1–2 hours (I’m not great with HTML/CSS), but this took just minutes.

I also started building a puzzle game in Gemini Canvas. The first prompt was as follows:

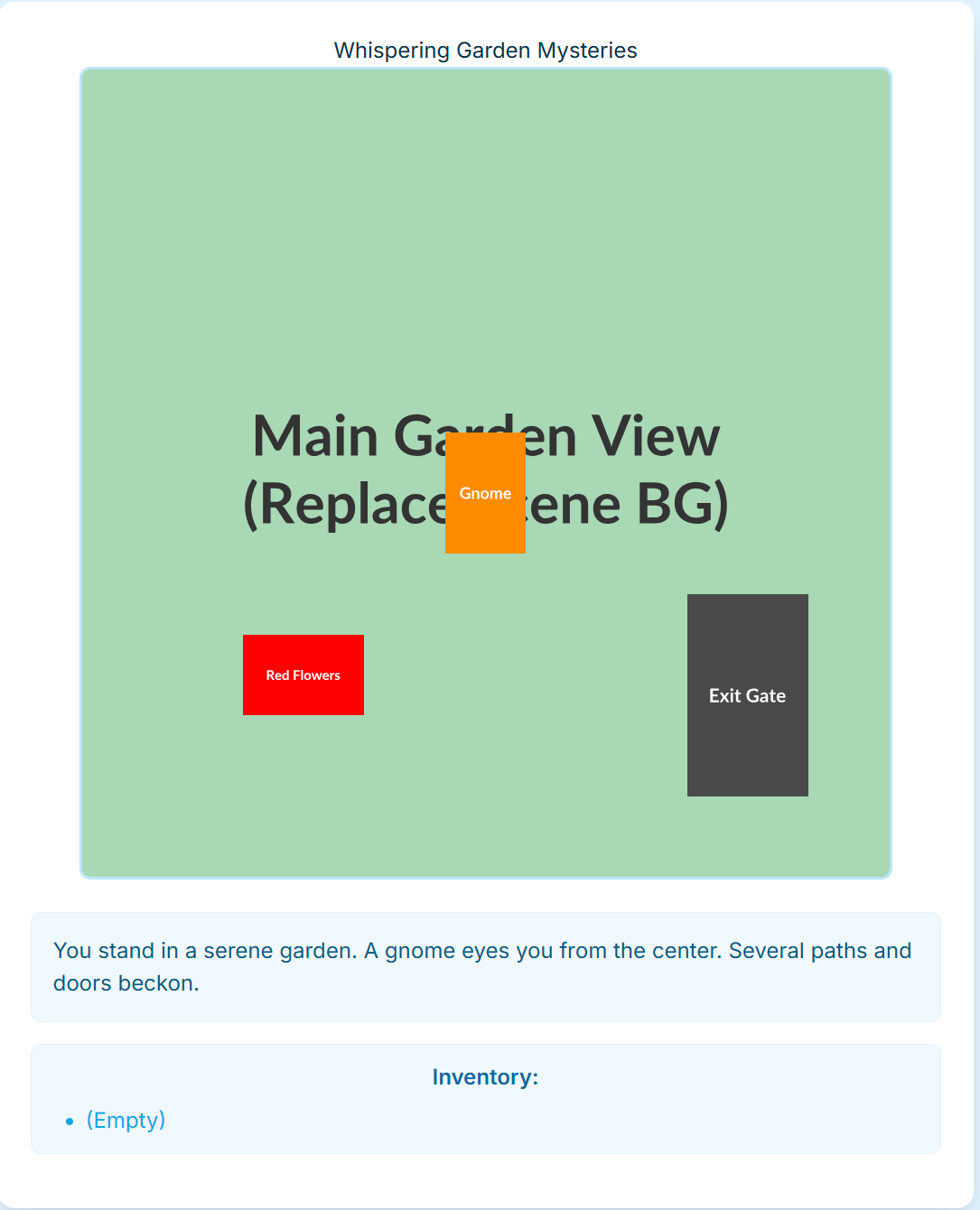

Design me a webpage javascript game. It should be a puzzle game that will be solved by doing action in correct order - gathering items, defeating enemies etc.

It gave me a simple but functional text adventure game: I could pick up items, interact with various objects, and finally exit the garden to complete the game.

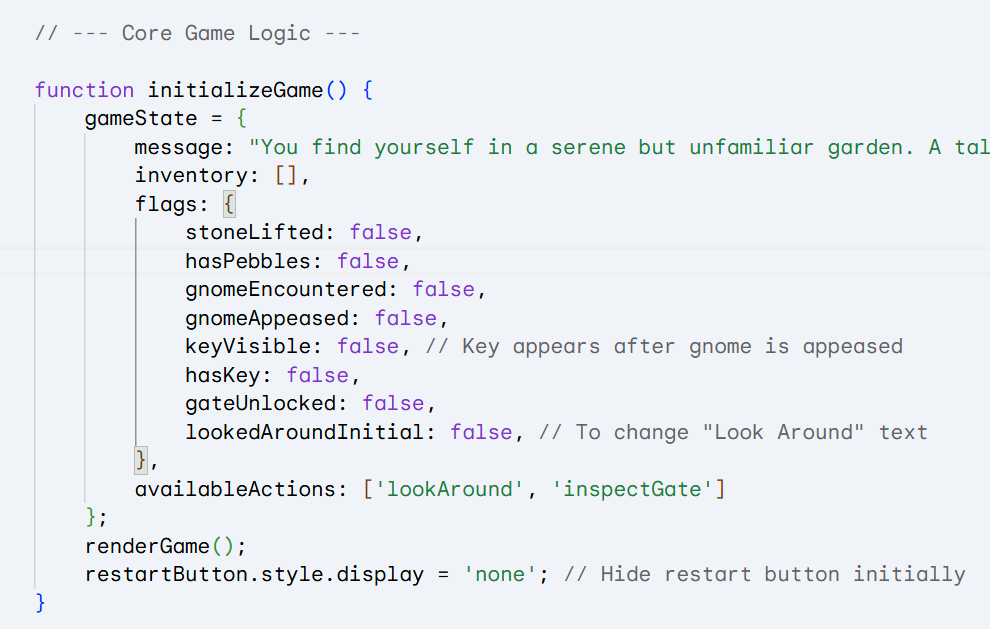

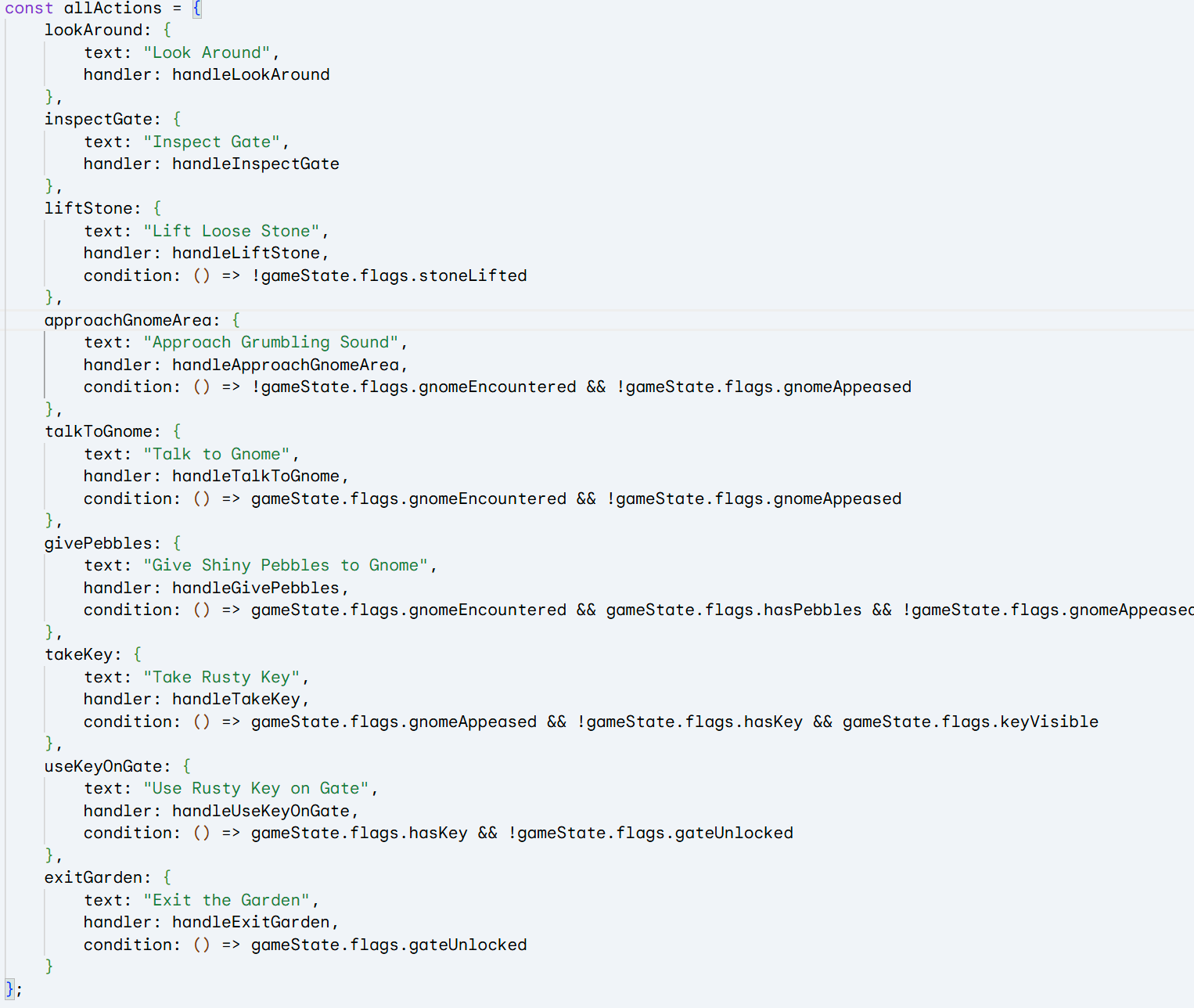

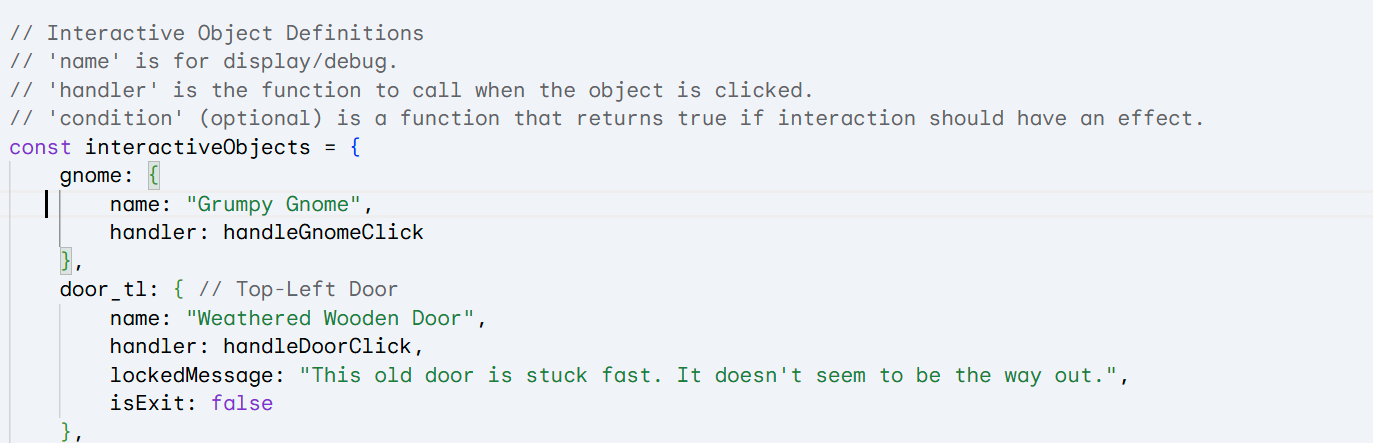

What about the code behind this version? The initial logic was basic and flag-based, but it worked.
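In case “flag-based” is unfamiliar: the game state is just a handful of booleans, and each action checks and sets them. The sketch below illustrates the idea in JavaScript. It is not the code Gemini generated; the flags (`hasKey`, `gateOpen`, `finished`), the `act` function and the key/gate puzzle are hypothetical stand-ins for the garden game.

```javascript
// Minimal sketch of flag-based puzzle logic (hypothetical names, not the generated code).
// Each flag records whether one step of the puzzle has been completed.
const state = { hasKey: false, gateOpen: false, finished: false };

// Hypothetical action handler: every action checks and sets flags,
// so the puzzle can only be solved by doing things in the right order.
function act(action) {
  switch (action) {
    case "take key":
      if (state.hasKey) return "You already have the key.";
      state.hasKey = true;
      return "You pick up a rusty key.";
    case "open gate":
      if (!state.hasKey) return "The gate is locked.";
      state.gateOpen = true;
      return "The key turns and the gate creaks open.";
    case "exit garden":
      if (!state.gateOpen) return "The gate is still closed.";
      state.finished = true;
      return "You leave the garden. You win!";
    default:
      return "Nothing happens.";
  }
}

console.log(act("open gate"));   // locked: the key has not been taken yet
console.log(act("take key"));
console.log(act("open gate"));
console.log(act("exit garden"));
```

Because every step is tracked by its own flag, this style is fine for a short linear puzzle but tends to get harder to manage as more items and rooms are added.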

That wasn’t exactly what I wanted, so I refined it with a further prompt:

Can you design it in such way that you have to click on the objects.

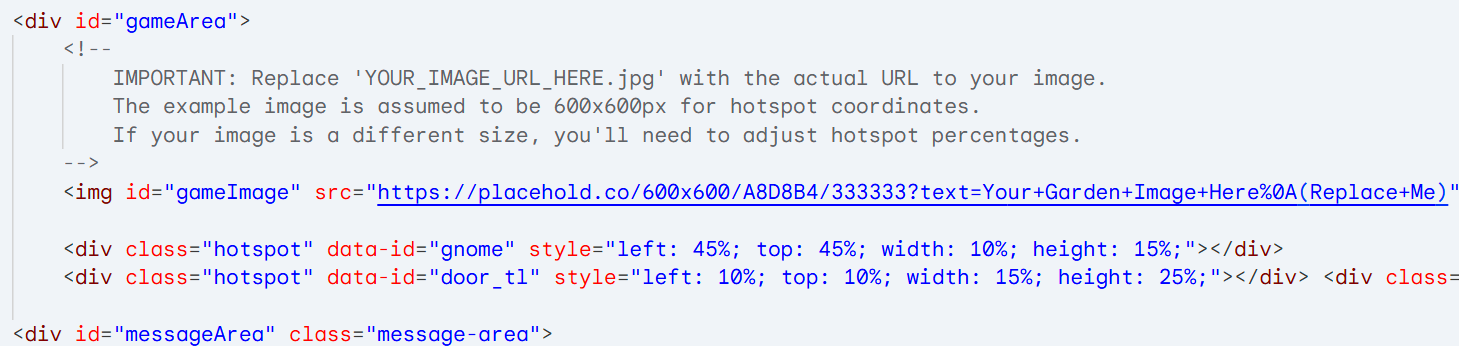

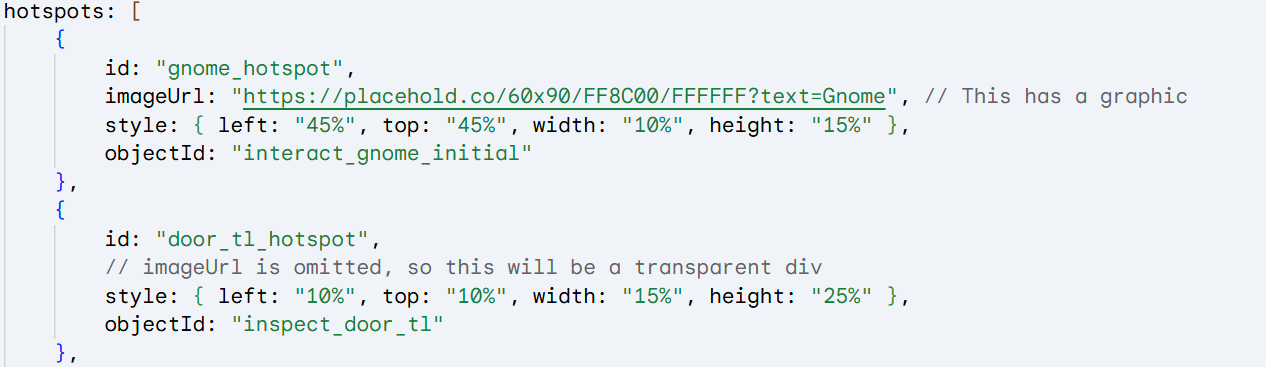

At this stage, all objects were grouped into a container, each with its own assigned functions and logic. However, this prompt unintentionally simplified the game too much. The logic became confined to a single screen, with all objects hardcoded directly into the page—eliminating the possibility of switching between scenes or expanding the gameplay.
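Roughly, the single-screen version can be pictured like the sketch below: one hardcoded list of objects, each with its own click handler. This is an illustration, not the generated code; the names (`objects`, `onClick`, `click`, `inventory`) are hypothetical, and the DOM wiring is left out so it runs in Node or a browser console.

```javascript
// Minimal sketch of a single-screen layout (hypothetical names): every clickable
// object lives in one hardcoded container, each with its own click handler.
const inventory = new Set();

const objects = [
  {
    id: "key",
    onClick() {
      inventory.add("key");
      return "You pick up the key.";
    },
  },
  {
    id: "gate",
    onClick() {
      return inventory.has("key") ? "The gate opens." : "The gate is locked.";
    },
  },
];

// Dispatch a click to the matching object. There is only one screen,
// so no handler can swap in a different set of objects.
function click(id) {
  const obj = objects.find((o) => o.id === id);
  return obj ? obj.onClick() : "There is nothing there.";
}

console.log(click("gate")); // "The gate is locked."
console.log(click("key"));
console.log(click("gate")); // "The gate opens."
```

Since `objects` is a single fixed list, there is nowhere to describe a second screen, which is exactly the limitation that showed up at this stage.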

Since I wasn’t precise enough with my previous prompt, I added a follow-up to clarify my intent.

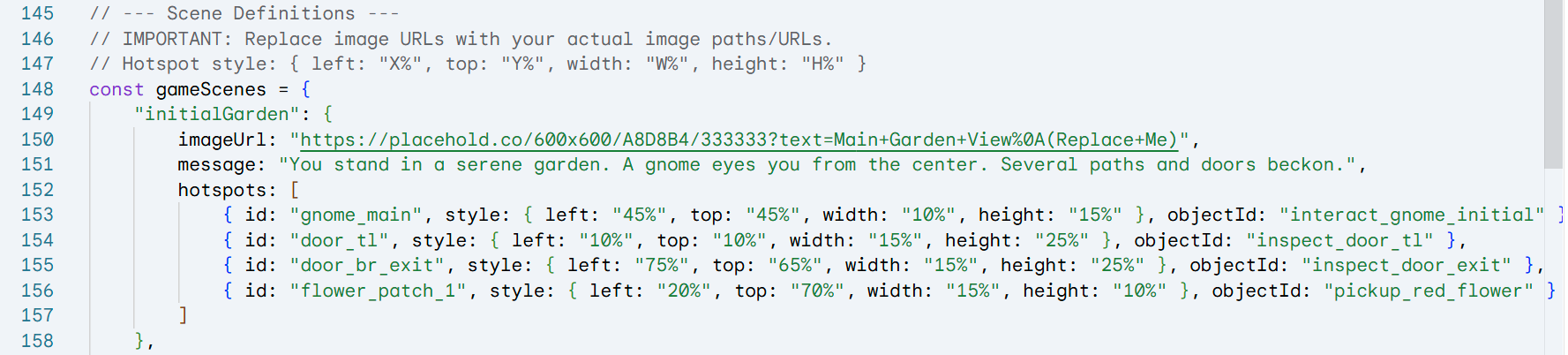

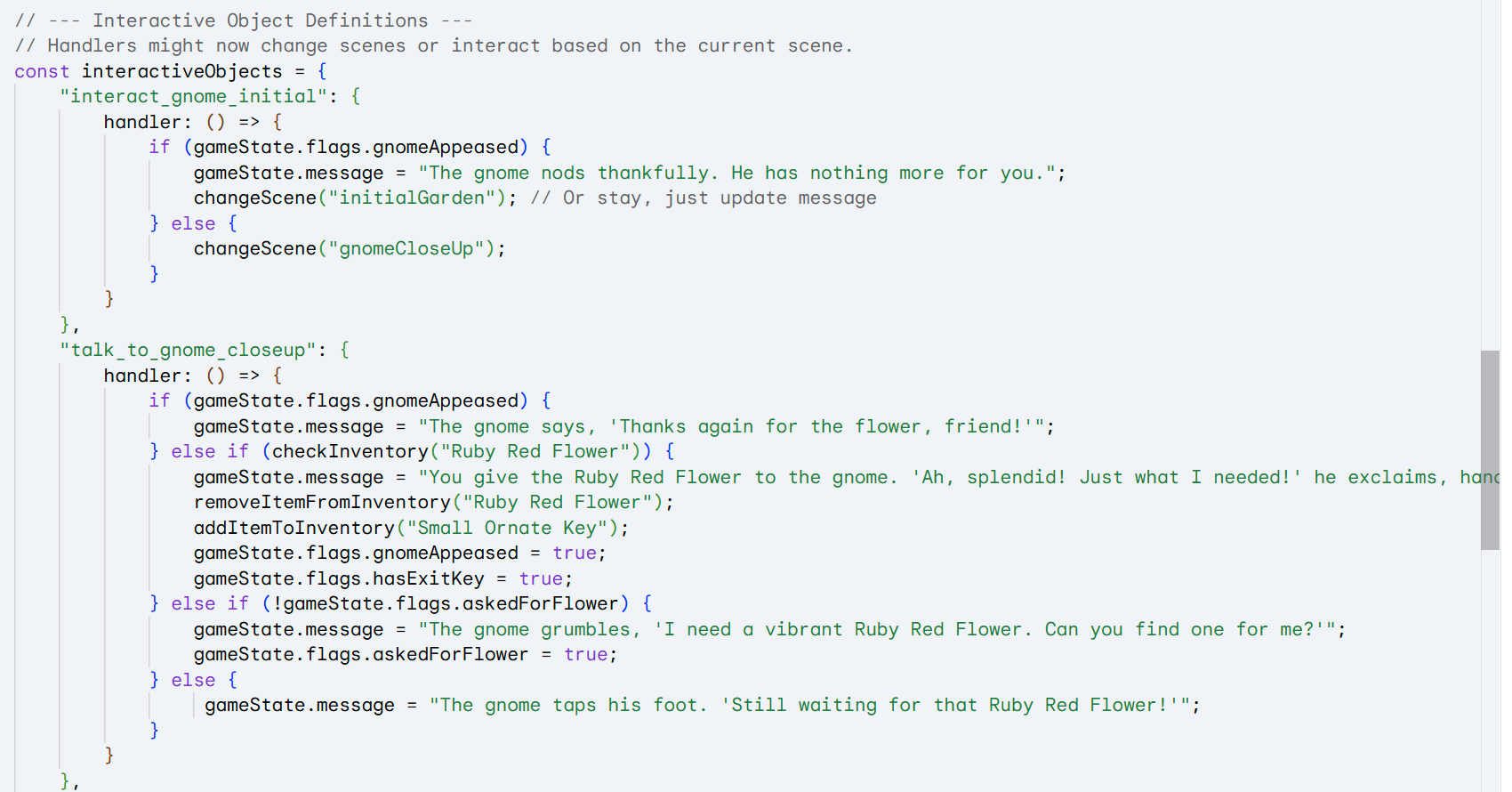

Would it also be possible for some of the interactive objects to change the screen and objects that might be clicked?

This resulted in the first version that truly felt like the real deal, much closer to what I had envisioned. While a few adjustments were still needed, Gemini provided a solid layout that made future expansion and modifications much easier.

Each scene now had its own list of clickable objects, and each object came with a defined set of actions and messages for the player. It finally felt like a proper foundation for a more complex and interactive game.
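A scene-based structure along these lines might look like the following sketch. Again, the names (`scenes`, `hotspots`, `goTo`, `gives`, `clickHotspot`) and the garden/shed content are hypothetical, not taken from Gemini’s output.

```javascript
// Minimal sketch of a scene-based layout (hypothetical names and content).
// Each scene owns its own list of hotspots; each hotspot carries its message and effects.
const scenes = {
  garden: {
    hotspots: [
      { id: "shed", message: "You step into the shed.", goTo: "shed" },
      { id: "fountain", message: "The water is cold and clear." },
    ],
  },
  shed: {
    hotspots: [
      { id: "door", message: "You walk back outside.", goTo: "garden" },
      { id: "shovel", message: "You take the shovel.", gives: "shovel" },
    ],
  },
};

const game = { scene: "garden", inventory: new Set() };

// Look the hotspot up in the current scene only, apply its effects,
// and switch scenes when the hotspot defines a `goTo` target.
function clickHotspot(id) {
  const hotspot = scenes[game.scene].hotspots.find((h) => h.id === id);
  if (!hotspot) return "There is nothing there.";
  if (hotspot.gives) game.inventory.add(hotspot.gives);
  if (hotspot.goTo) game.scene = hotspot.goTo;
  return hotspot.message;
}

console.log(clickHotspot("shed"));     // moves to the shed scene
console.log(clickHotspot("shovel"));   // only clickable inside the shed
console.log(clickHotspot("fountain")); // "There is nothing there." (wrong scene)
```

With this layout, adding a new screen means adding one more entry to `scenes`; the click logic itself stays the same.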

With my final prompt, I requested optional graphics for each hotspot. The idea was to avoid generating highly specific images or layering objects manually.

Each of the hotspots should have it own graphics too. If there is no ImageURL provided the hotspot should be just transparent so we can see the background but still clickable.

Each hotspot now supported its own graphic. If no imageURL was provided, the hotspot stayed transparent, letting the background show through while remaining clickable.

The result was once again quite satisfactory. I could interact with all the objects, each had its own image placeholder, and nothing broke in this iteration (aside from a missing border on the transparent hotspots, which was an easy fix with a quick copy-paste from the previous version). The code structure was also exactly how I would have designed it myself, with a new object dedicated to storing image URLs.
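The “separate object for image URLs” idea can be sketched as follows. This assumes hotspots are styled through inline CSS; `hotspotImages`, `hotspotStyle` and the file path are hypothetical names, not the generated code.

```javascript
// Minimal sketch (hypothetical names): image URLs live in their own lookup,
// separate from the hotspot logic, and a hotspot without an entry stays transparent.
const hotspotImages = new Map([
  ["shovel", "images/shovel.png"],
  // "door" deliberately has no entry, so the background shows through.
]);

// Build the inline style for a hotspot element. The element stays clickable
// either way; only its appearance depends on whether an image URL exists.
function hotspotStyle(id) {
  const url = hotspotImages.get(id);
  return url
    ? { backgroundImage: `url("${url}")`, backgroundSize: "contain", cursor: "pointer" }
    : { backgroundColor: "transparent", cursor: "pointer" };
}

console.log(hotspotStyle("shovel"));
console.log(hotspotStyle("door"));
```

In the browser, the result could be applied with `Object.assign(element.style, hotspotStyle(id))`. Keeping the URLs in their own `Map` means the art can be swapped without touching the game logic.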

With this setup in place, I moved on to full customization. I could have asked the AI to place the assets directly, but I found that approach counterproductive—especially since it often struggled to position the assets exactly where I wanted them.

Instead, I plan to restructure the code by dividing it into separate functions and files, which should make future development much smoother. That’s something I’ll cover in Part 2.

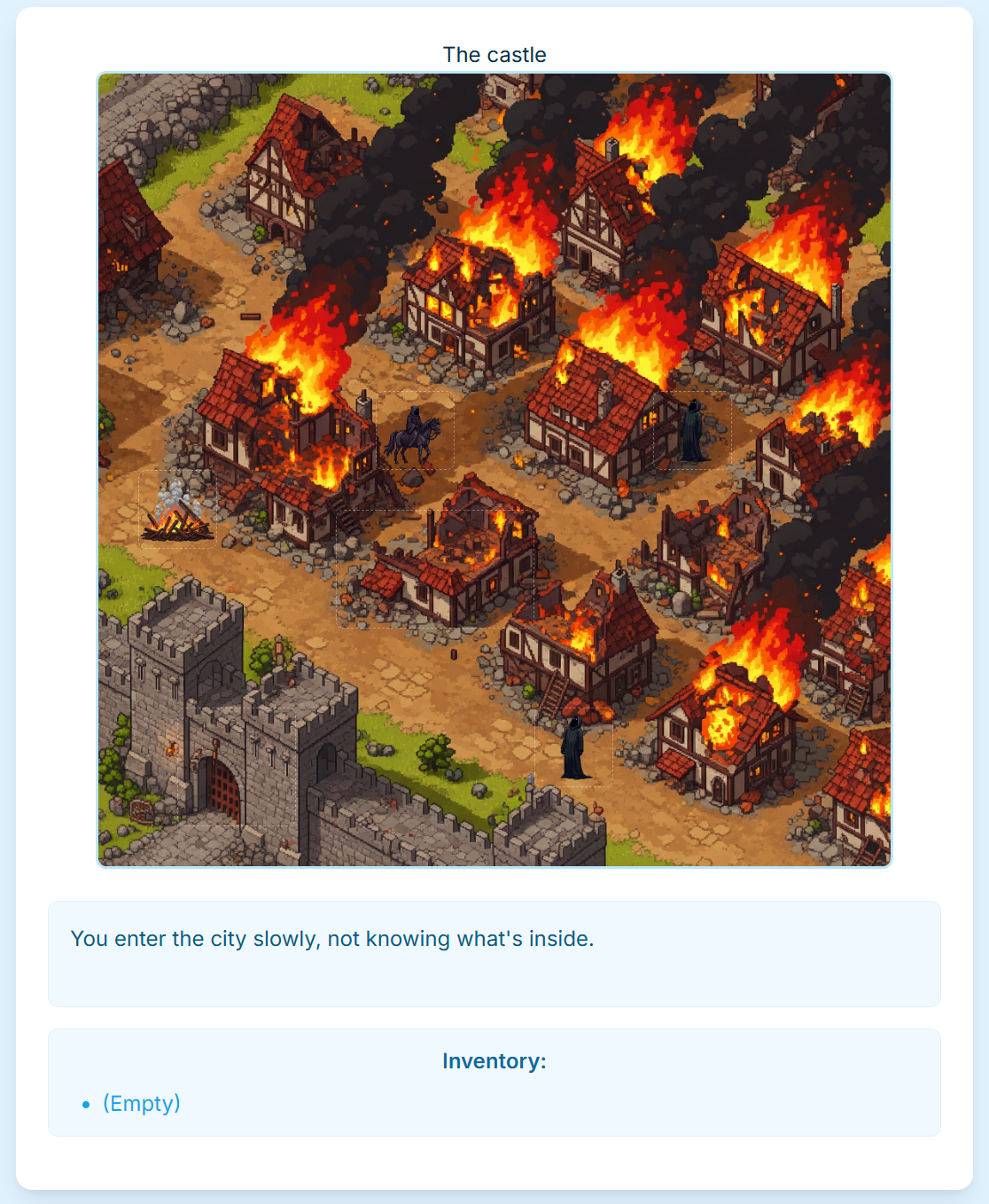

All of the assets were generated using prompts in a separate Gemini chat, and the final result looked like this:

It’s available online if you want to try it: https://ai.oldwisebear.com/Game1/game1

What’s next

This was just a demo. I plan to expand it with:

- Background and object animations

- Hotspots that appear after certain actions

- Multiple actions per hotspot

- Music and sound effects

- Default text for unhandled actions
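Three of these items (multiple actions per hotspot, hotspots that appear after certain actions, and default text for unhandled actions) could fit together roughly as in this sketch. It is one possible design, not a finished implementation; `flags`, `visibleWhen`, `DEFAULT_TEXT` and `perform` are hypothetical names, and the chest/map content is made up for illustration.

```javascript
// Minimal sketch of three planned features (hypothetical names and content):
// multiple actions per hotspot, hotspots that only appear once a flag is set,
// and a default message for anything unhandled.
const flags = new Set();

const hotspots = [
  {
    id: "chest",
    actions: {
      look: () => "An old wooden chest.",
      open: () => {
        flags.add("chestOpened");
        return "Inside, you find a map.";
      },
    },
  },
  {
    id: "map",
    visibleWhen: "chestOpened", // hidden until the chest has been opened
    actions: { take: () => "You take the map." },
  },
];

const DEFAULT_TEXT = "That does not seem to work.";

// A hotspot is visible if it has no condition or its flag has been set.
function visibleHotspots() {
  return hotspots.filter((h) => !h.visibleWhen || flags.has(h.visibleWhen));
}

// Run the requested action, falling back to DEFAULT_TEXT when the hotspot
// is hidden, missing, or has no handler of its own for that action.
function perform(id, action) {
  const hotspot = visibleHotspots().find((h) => h.id === id);
  if (!hotspot || !Object.prototype.hasOwnProperty.call(hotspot.actions, action)) {
    return DEFAULT_TEXT;
  }
  return hotspot.actions[action]();
}

console.log(perform("map", "take"));   // default text: the map is not visible yet
console.log(perform("chest", "kick")); // default text: no "kick" handler
console.log(perform("chest", "open"));
console.log(perform("map", "take"));
```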

So, what can we take away from all of this?

- AI can now build simple websites and game skeletons with a single prompt. That’s a huge time-saver.

- Prompts don’t need to be overly specific. The code is well-commented and easy to customize.

- Asset generation (especially in Gemini) still struggles with consistent art styles. More testing is needed here.

- Preliminary research is very doable. Just remember to verify your sources.