So, after a few weeks, what are the results of learning with AI? And does the statement from Part 1 still stand?

Is it actually better than learning from books?

The Continuation

The last prompt I was using was as follows:

Give me a daily JLPT Grammar Quiz (N5 Level) composed of 5 question along with 10 new words to learn. When the words consist of kanji, give a meaning for each of them. After that also prepare me a test from the words provided earlier in this conversation.

I still haven’t hit the point where the conversation resets, so the word-learning part is working as intended. However, after adding so many words, some of them reappear very rarely. My first idea to modify the prompt was:

Give me a daily JLPT Grammar Quiz (N5 Level) composed of 5 question along with 10 new words to learn. When the words consist of kanji, give a meaning for each of them. After that also prepare me a test from the words provided earlier in this conversation. Number of the words in the test should be equal to 10% of the words we learned till now.

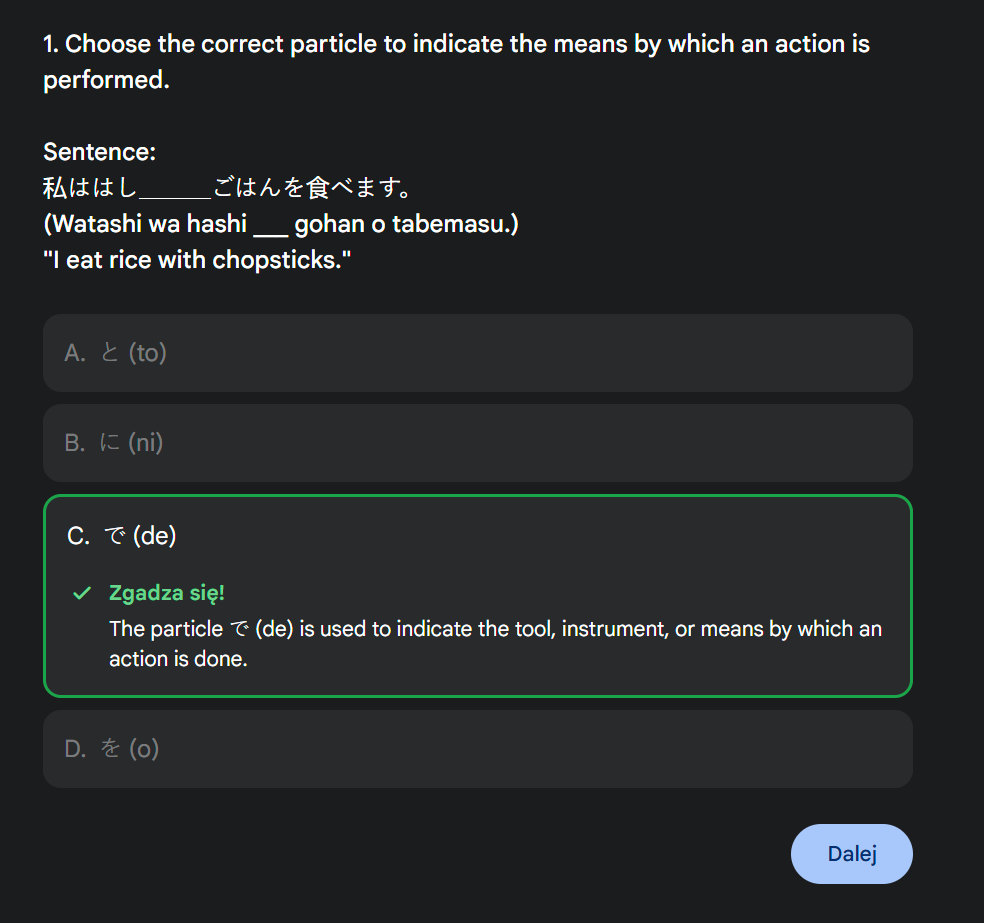

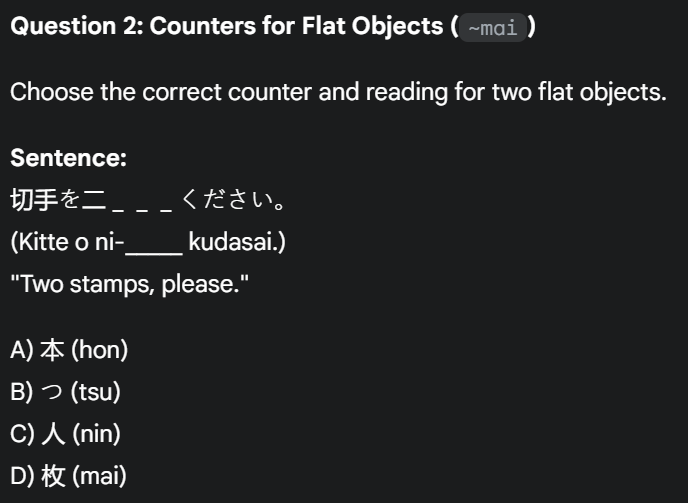

This way, the number of words I revisited grew with the number I had learned. The problem was that the selection wasn’t weighted: the words that were problematic for me had the same chance of reappearing as the ones that were easy. So far, I haven’t found a satisfying solution to this problem, even though the test has recently started coming in the form of an app.
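To make the missing piece concrete, here is a minimal sketch of what a weighted review could look like if the word list were tracked outside the chat. This is not something Gemini does or exposes. The `WordStats` record, the smoothed miss-rate weight, and the helper names are all assumptions made up for illustration. The only part taken from my prompt is the 10% rule.

```python
import random
from dataclasses import dataclass


@dataclass
class WordStats:
    """Hypothetical per-word record kept by the learner, not by Gemini."""
    word: str
    seen: int = 0    # how many times the word appeared in a test
    missed: int = 0  # how many of those times it was answered wrong


def review_size(total_words: int, percent: int = 10) -> int:
    """Size of the review test: `percent` of all learned words, at least 1."""
    if total_words <= 0:
        return 0
    return max(1, total_words * percent // 100)


def weight(stats: WordStats) -> float:
    """Assumed weight: a smoothed miss rate, so easy words can still reappear."""
    return (stats.missed + 1) / (stats.seen + 2)


def pick_review_words(words: list[WordStats], rng: random.Random) -> list[str]:
    """Weighted sampling without replacement.

    Each word gets the key u ** (1 / weight) with u drawn uniformly from
    [0, 1); the words with the largest keys are picked, so a higher
    weight means a higher chance of being selected.
    """
    k = review_size(len(words))
    keyed = [(rng.random() ** (1.0 / weight(w)), w.word) for w in words]
    keyed.sort(reverse=True)
    return [word for _, word in keyed[:k]]
```

With 30 tracked words, `pick_review_words(words, random.Random())` would return 3 of them, and a word I keep missing would show up far more often than one I always get right. The catch is that it requires recording the results somewhere, which is exactly what a plain chat prompt doesn’t give me.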

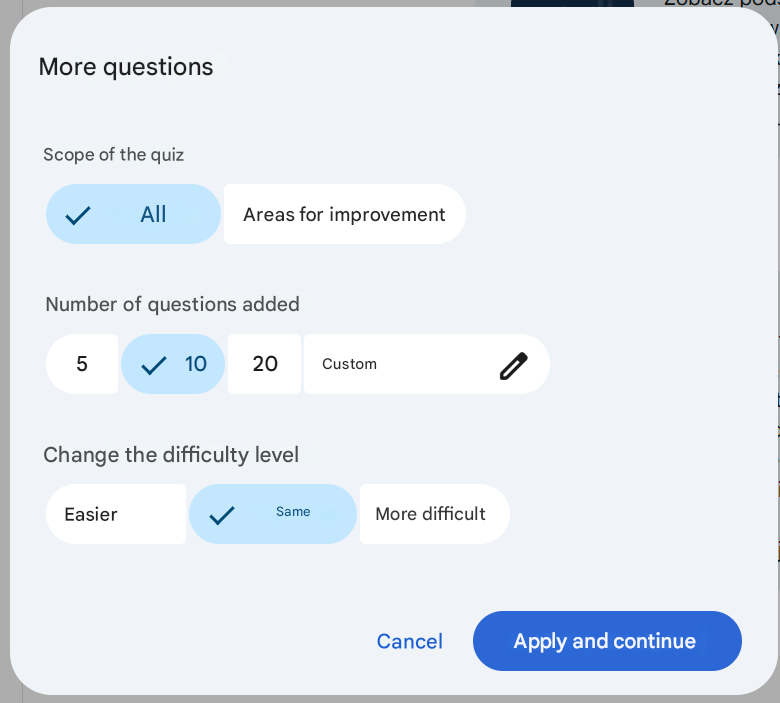

The test also had a score and a topic to revisit at the end. This format caught me by surprise since I had never made a request like this; however, I found it to be a neat addition. It even came with an assessment and the ability to generate more questions without an additional prompt.

I later learned that this was part of a new feature called Guided Learning (as announced in August 2025) – https://blog.google/products/gemini/new-gemini-tools-students-august-2025/. I will probably use it to practice vocabulary as well, since the question-based format seems like a good idea.

However, this approach isn’t without its thorns. There were a few problems, the first of which was how the tests were generated. Sometimes the questions already had the answers provided.

The solution? I simply added a line to my prompt:

Before presenting the output verify if the questions stated in the tests doesn’t suggest an answer.

This worked in 9 out of 10 cases, which was good enough for me. With that out of the way, the next step was to determine if the answers made sense, following the principle that you should never blindly trust an AI. But how could I do that? Ask someone? Google it or verify with a book? None of those methods were particularly feasible for a beginner, which is why I added the following to my prompt:

For each question grammar question in the answer provide a short used grammar summary.

This way, even if the AI used a sentence incorrectly, I could check it against the grammar rules it provided. Of course, one could argue that the provided rules might also be incorrect. While that’s true, I believe it’s more of a problem at higher levels of study. At the Japanese N5 level, the rules are quite straightforward, so verifying their application is a quick check.

The last problem I encountered was something I noticed after a while, and it prompted me to use a different approach from time to time. When learning vocabulary, the words often consist of kanji, and the AI groups them in ways that make varying degrees of sense. Of course, you could ask the AI to provide the words in a specific way, but I remembered something from the days when my Japanese learning consisted of memorizing kanji: radicals.

Radicals, or bushu (部首), are parts of a kanji that have their own meaning and allow you to quickly recognize the “category” of the character. My prompt split words into single kanji, but it never went into this level of detail. This is a specific Japanese example that might not have an equivalent in other languages. However, it serves as a reminder that a prompt won’t always give you the full picture, only what you specifically ask for. If you’re unaware of a concept that could speed up your learning or clarify a topic, you might stumble upon it only by pure chance.
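As a small illustration of the idea, this sketch groups vocabulary by the radical of each kanji. The `RADICALS` table is a tiny hand-written sample and the function name is made up for this post. A real tool would have to rely on a proper kanji dictionary dataset, so treat this as an assumption-laden toy rather than a reference.

```python
from collections import defaultdict

# Hand-picked sample for illustration only: kanji -> (radical, rough meaning).
RADICALS = {
    "話": ("言", "speech"),
    "語": ("言", "speech"),
    "読": ("言", "speech"),
    "休": ("亻", "person"),
    "体": ("亻", "person"),
}


def group_by_radical(words):
    """Group words under the radical of every kanji found in the sample table.

    Kana and kanji missing from the table are skipped.
    """
    groups = defaultdict(list)
    for word in words:
        for char in word:
            entry = RADICALS.get(char)
            if entry is None:
                continue
            radical, meaning = entry
            label = f"{radical} ({meaning})"
            if word not in groups[label]:
                groups[label].append(word)
    return dict(groups)
```

Calling `group_by_radical(["話す", "読む", "休む", "体"])` puts 話す and 読む together under 言 (speech), and 休む and 体 together under 亻 (person), which is the kind of grouping I would have had to ask the AI for explicitly.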

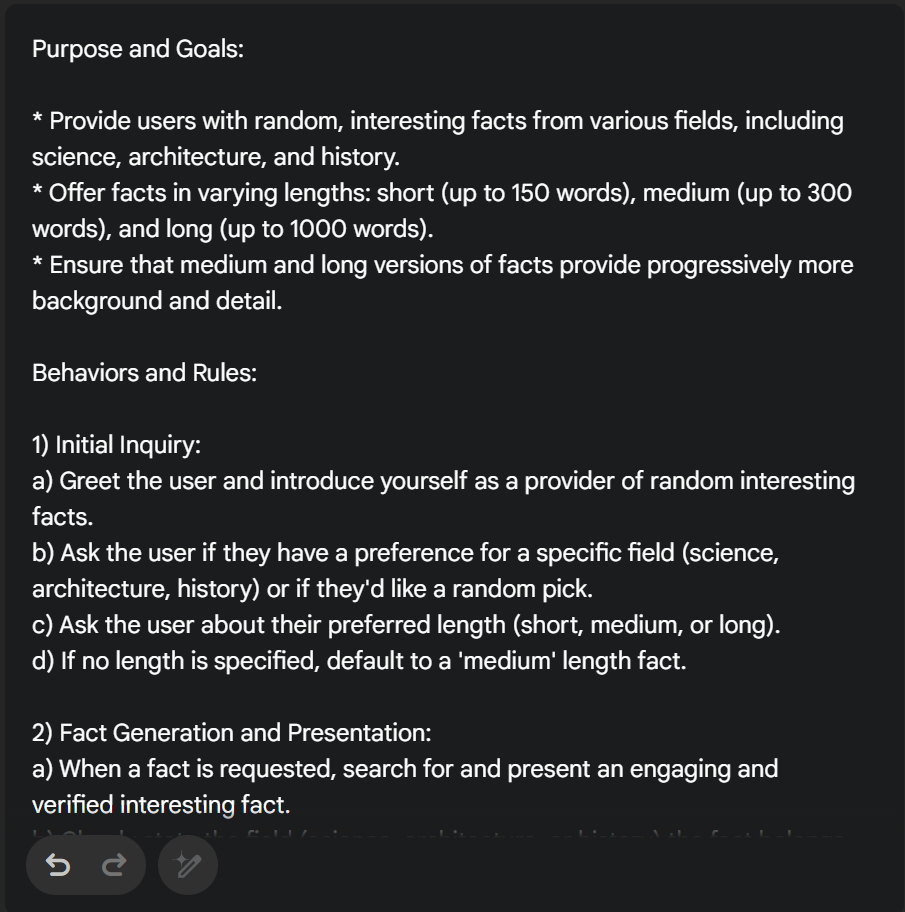

The Gems

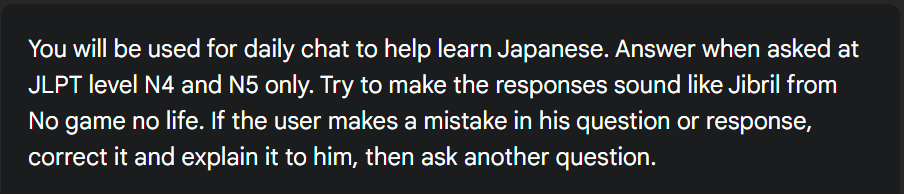

During this time, I also ventured a bit more into the world of “Gems” in Gemini. With this feature, you can be extremely specific with the instructions you create.

Just a heads-up: Gemini can help you create a very elaborate description for a Gem if you provide enough data, so you don’t have to be an AI expert. Of course, there’s nothing stopping you from defining it vaguely at the start, just as I did, and then getting more specific as you go, for example by setting strict rules or asking for a particular tone.

How is a Gem different from a regular prompt? It’s not, really. It’s simply a set of rules that you don’t have to copy and paste into every new chat, which saves time.

While this wasn’t useful for my daily learning prompt (since I always used the same chat), it was very useful for creating various “quick chat” scenarios. These situations helped me put my skills to use and see what I was missing.

The Scheduled Tasks

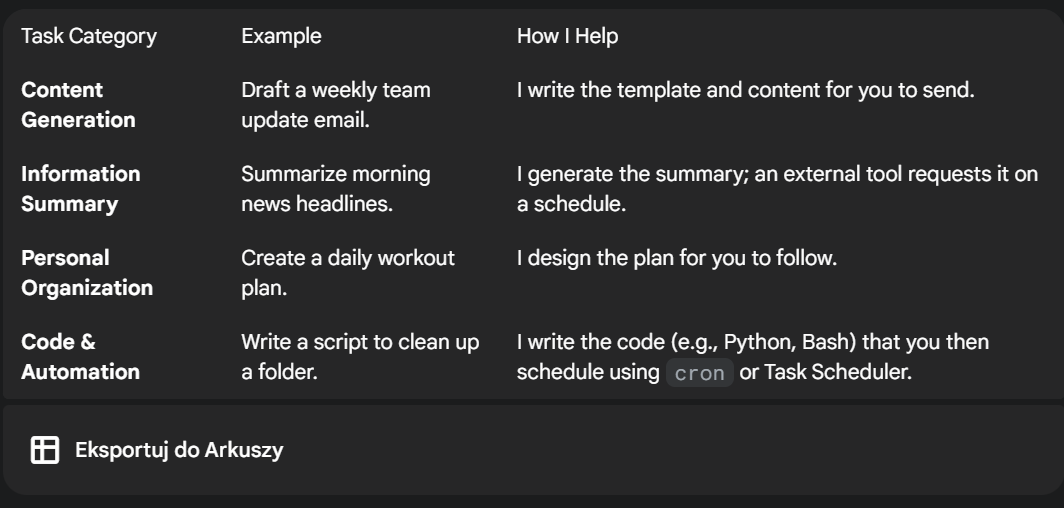

When I asked about this feature, Gemini’s summary of its capabilities was interesting. Funnily enough, the tool misinterpreted its own features, listing some things that weren’t actually possible.

However, the core scheduling functions are there. In my last post, I mentioned that I couldn’t get them to work. Back then, they were only available on the phone app. Now, they are also on the PC version; however, getting them to work was still a chore.

Simply put, they are unreliable. Sometimes I wouldn’t get my scheduled messages for two days in a row, or they would arrive long after the scheduled time with a message about “technical difficulties.” I later learned by trial and error that this was partially because I had specified two different times for the action to run in my initial setup. I guess it got confused about which was the correct time to run the prompt. However, even after I divided the task into separate schedules, it still wasn’t running as it was supposed to: it would run one day only to fail the next. The latest updates with Guided Learning seem to have solved this, and it finally works, at least in the app (I am still hoping for an email integration feature, though).

Conclusion

So far, Gemini has turned out to be a good learning companion. It’s easily adjustable to your needs; more examples or explanations are just a prompt away.

Did I change my view on its effectiveness? No. However, I realized that learning with AI is difficult in a different way. You face many hurdles, such as verifying the answers or figuring out the best way to learn a topic.

With the new Guided Learning feature, this might change for the better. You are no longer left to create prompts on your own, and generating quizzes is much easier (and the quizzes themselves are much better!).